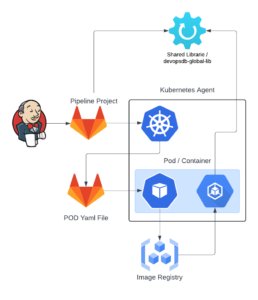

In this post, I will show another step in using PODs/Containers in pipelines.

This post brings together previous posts about using pipeline scripts in Jenkins: "Jenkins – Pipeline source from GitLab", "Jenkins – Shared Libraries", "Jenkins – Agents in Kubernetes" and "Jenkins – Pod Template at Pipeline runtime". The idea is to have the Pods created at runtime, as in the previous post, but with the Pod manifest in a separate file from the pipeline script.

So far, there isn’t much difficulty or difference compared with the previous post. But when using Shared Libraries and GitLab, there is an important behavior that I noticed and need to describe.

Because of a detail in how I was creating the Pod for this example, I realized that the methods called from the Shared Library do not run inside the container indicated in the stage/script block. They run only in the jnlp container, and as a result some behaviors change a little.

To show how to share information between containers, I was creating a shared volume between the containers of the Pod. So, when I asked the Shared Library to create the folders and check out files from GitLab, it failed. Those methods were running in the jnlp container, which did not have the volume mounted, so the path I was using (/mnt/pipelines/*) had neither the folders nor the permissions to create them.
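The failure can be reproduced with a throwaway stage that creates the folder from container-1 and then looks for it from jnlp. This is a minimal sketch. It assumes `yamlFile` points at a manifest where only container-1 mounts shared-volume at /mnt and jnlp is the plugin's default container, like the second manifest later in this post. The stage name is hypothetical.

```groovy
pipeline {
    agent {
        kubernetes {
            // Assumed: a manifest where only container-1 mounts shared-volume at /mnt
            yamlFile 'file-pod/resources/pods/file-pod.yaml'
        }
    }
    options { skipDefaultCheckout(true) }
    stages {
        stage('Check /mnt') { // hypothetical diagnostic stage
            steps {
                container('container-1') {
                    // Runs inside container-1, which has the shared volume mounted on /mnt
                    sh 'mkdir -p /mnt/pipelines && ls -ld /mnt/pipelines'
                }
                container('jnlp') {
                    // jnlp has no mount on /mnt here, so the folder is not found
                    sh 'ls -ld /mnt/pipelines || echo "/mnt/pipelines is not visible in the jnlp container"'
                }
            }
        }
    }
}
```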

It is not necessary to create a shared volume to share information between the containers. They already use the same workspace, and it works in the same way. Still, this behavior is worth sharing.
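The shared workspace can be checked in a similar way: write a file with a relative path from container-1 and read it back from jnlp. This is a minimal sketch. The file name `marker.txt` and the stage name are hypothetical, and the manifest path is the one used in this post.

```groovy
pipeline {
    agent {
        kubernetes {
            yamlFile 'file-pod/resources/pods/file-pod.yaml'
        }
    }
    options { skipDefaultCheckout(true) }
    stages {
        stage('Workspace check') { // hypothetical stage name
            steps {
                container('container-1') {
                    // Relative path: resolved against the workspace under /home/jenkins/agent
                    sh 'echo "written from container-1" > marker.txt'
                }
                container('jnlp') {
                    // Same path in jnlp: both containers mount workspace-volume at /home/jenkins/agent
                    sh 'cat marker.txt'
                }
            }
        }
    }
}
```

If the workspace is shared as described, the jnlp stage should print the line written from container-1, without any extra volume in the manifest.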

In the Pod description below, look at the mounts: /home/jenkins/agent from workspace-volume (rw) is present in every container. In other words, if you create the folders, do the checkout, etc. inside the workspace, it works perfectly.

```shell
kubectl describe pods infrastructure-pipelines-tests-test-file-pod-59-qg64v-fw6-tf7j6 --namespace jenkins-devopsdb
```

```
Containers:
  container-1:
    Container ID: containerd://96f26b2eb26a002868c579339944fc94612a66b6d6f2b618813bd0aa51b9a26a
    Image: registry.devops-db.internal:5000/img-jenkins-devopsdb:2.0
    Image ID: registry.devops-db.internal:5000/img-jenkins-devopsdb@sha256:76ca827b2ef7c85708864e0792a4d896c6320d9988be21b839a478f2eb1f2026
    Port: <none>
    Host Port: <none>
    Command:
      cat
    State: Running
      Started: Wed, 26 Jun 2024 22:39:02 +0100
    Ready: True
    Restart Count: 0
    Environment:
      CONTAINER_NAME: container-1
    Mounts:
      /home/jenkins/agent from workspace-volume (rw)
      /mnt from shared-volume (rw)
      /var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-6rn4w (ro)
  jnlp:
    Container ID: containerd://7635c708368e668417d010116ba5f2e0003095533631eb12574e00a874228155
    Image: jenkins/inbound-agent
    Image ID: docker.io/jenkins/inbound-agent@sha256:131453e429e9a0e4e37584dc122346fd2fd9cc2b98b4cb0688f5ea9f7adcff5c
    Port: <none>
    Host Port: <none>
    State: Running
      Started: Wed, 26 Jun 2024 22:39:03 +0100
    Ready: True
    Restart Count: 0
    Requests:
      cpu: 100m
      memory: 256Mi
    Environment:
      JENKINS_SECRET: 3a969b8d6778d575f3fbbddba9d63c7f5b92333e0b21fd8b19a935a06de55308
      JENKINS_AGENT_NAME: infrastructure-pipelines-tests-test-file-pod-59-qg64v-fw6-tf7j6
      REMOTING_OPTS: -noReconnectAfter 1d
      JENKINS_NAME: infrastructure-pipelines-tests-test-file-pod-59-qg64v-fw6-tf7j6
      JENKINS_AGENT_WORKDIR: /home/jenkins/agent
      CONTAINER_NAME: jnlp
      JENKINS_URL: http://jenkins.lab.devops-db.info/
    Mounts:
      /home/jenkins/agent from workspace-volume (rw)
      /mnt from shared-volume (rw)
      /var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-6rn4w (ro)
```

Using shared-volume.

Continuing with a separate shared volume, I explicitly declared the jnlp container in the manifest and added the same volumeMounts to it. With this, the Shared Library methods still run in jnlp, but what they write lands in the shared volume and is available to the other containers.

The repository structure in GitLab looked like this (the files are also available at https://github.com/faustobranco/devops-db/tree/master/infrastructure/pipelines/tests/file-pod):

```
.
└── file-pod
    ├── pipeline.groovy
    ├── python-log.py
    └── resources
        └── pods
            └── file-pod.yaml
```

We have the pipeline script (pipeline.groovy), a Python script (python-log.py, just as an example) and file-pod.yaml, which holds the Pod/containers manifest. The manifest sits in a different folder from the pipeline to show that it can be in any path. It does not need to be in the root of the Git project or next to the pipeline file.

Creating the pipeline in Jenkins is no different from creating any other pipeline that uses SCM as the script source.

Note that at the beginning of the pipeline, unlike in the previous post, there is no Pod declaration. There is only the path to the manifest file (the yamlFile parameter):

```groovy
// Load the global Shared Library and import its Utilities class
@Library('devopsdb-global-lib') _

import devopsdb.utilities.Utilities

def obj_Utilities = new Utilities(this)

pipeline {
    agent {
        kubernetes {
            // Pod/containers manifest read from a file in the same repository
            yamlFile 'file-pod/resources/pods/file-pod.yaml'
            retries 2
        }
    }
    options {
        timestamps()
        skipDefaultCheckout(true)
    }
    stages {
        stage('Environment') {
            steps {
                container('container-1') {
                    script {
                        def str_folder = "/mnt/pipelines/resources"
                        def str_folderCheckout = "/pip"
                        // Create the folder on the shared volume and sparse-checkout the pip config into it
                        obj_Utilities.CreateFolders(str_folder)
                        obj_Utilities.SparseCheckout('git@gitlab.devops-db.internal:infrastructure/pipelines/resources.git',
                                                     'master',
                                                     str_folderCheckout,
                                                     'usr-service-jenkins',
                                                     str_folder)
                        sh 'sleep 1s'
                        sh "PIP_CONFIG_FILE=${str_folder}${str_folderCheckout}/pip-devops.conf pip install devopsdb --break-system-packages"
                    }
                }
            }
        }
        stage('Script') {
            steps {
                container('container-1') {
                    script {
                        def str_folder = "/mnt/pipelines/python/log"
                        def str_folderCheckout = "/python-log"
                        // Sparse-checkout the Python script from the tests repository
                        obj_Utilities.CreateFolders(str_folder)
                        obj_Utilities.SparseCheckout('git@gitlab.devops-db.internal:infrastructure/pipelines/tests.git',
                                                     'master',
                                                     str_folderCheckout,
                                                     'usr-service-jenkins',
                                                     str_folder)
                    }
                }
            }
        }
        stage('Run') {
            steps {
                container('container-1') {
                    script {
                        def str_folder = "/mnt/pipelines/python/log"
                        def str_folderCheckout = "/python-log"
                        // Run the script checked out in the previous stage
                        sh "python3 ${str_folder}${str_folderCheckout}/python-log.py"
                    }
                }
            }
        }
        stage('Cleanup') {
            steps {
                cleanWs deleteDirs: true, disableDeferredWipeout: true
            }
        }
    }
}
```
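The original pipeline does not use it, but the Kubernetes plugin's declarative `kubernetes` agent also accepts a `defaultContainer` option. With it, steps run in that container without wrapping every stage in `container('container-1')`. This is a minimal sketch reusing the same manifest path, and the single stage is illustrative only.

```groovy
pipeline {
    agent {
        kubernetes {
            yamlFile 'file-pod/resources/pods/file-pod.yaml'
            // Steps such as sh run in container-1 unless a container() block says otherwise
            defaultContainer 'container-1'
            retries 2
        }
    }
    options {
        timestamps()
        skipDefaultCheckout(true)
    }
    stages {
        stage('Run') {
            steps {
                // Executed in container-1 (which has python3) without an explicit container() block
                sh 'python3 --version'
            }
        }
    }
}
```

As far as I can tell, this only changes where steps such as `sh` run by default. It should not move the Git checkout done by the Shared Library out of the jnlp container.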

What is important, and different from the previous posts, is that this Pod manifest explicitly declares the jnlp container, using the official Jenkins image (jenkins/inbound-agent):

```yaml
apiVersion: v1
kind: Pod
spec:
  containers:
    - name: container-1
      image: registry.devops-db.internal:5000/img-jenkins-devopsdb:2.0
      env:
        - name: CONTAINER_NAME
          value: "container-1"
      volumeMounts:
        - name: shared-volume
          mountPath: /mnt
      command:
        - cat
      tty: true
    - name: jnlp
      image: jenkins/inbound-agent
      env:
        - name: CONTAINER_NAME
          value: "jnlp"
      volumeMounts:
        - name: shared-volume
          mountPath: /mnt
  volumes:
    - name: shared-volume
      emptyDir: {}
```

I purposely set the CONTAINER_NAME environment variable in each container, with the value jnlp in the jnlp container, as this made it much easier to debug this behavior.

Note that I also made a small modification to our Shared Library, specifically to the folder creation method, so that it reports which container it is running in: https://github.com/faustobranco/devops-db/blob/master/infrastructure/pipelines/lib-utilities/src/devopsdb/utilities/Utilities.groovy

With that, the pipeline finally ran successfully.

Using workspace-volume.

Now let’s forget the shared volume and do the same operations in the workspace, which all containers share through workspace-volume. Note that this example is not in our GitHub repository.

pipeline.groovy:

```groovy
// Load the global Shared Library and import its Utilities class
@Library('devopsdb-global-lib') _

import devopsdb.utilities.Utilities

def obj_Utilities = new Utilities(this)

pipeline {
    agent {
        kubernetes {
            yamlFile 'file-pod/resources/pods/file-pod.yaml'
            retries 2
        }
    }
    options {
        timestamps()
        skipDefaultCheckout(true)
    }
    stages {
        stage('Environment') {
            steps {
                container('container-1') {
                    script {
                        // Everything now lives under the workspace, shared through workspace-volume
                        def str_folder = "${env.WORKSPACE}/pipelines/resources/pip"
                        def str_folderCheckout = "/pip"
                        obj_Utilities.CreateFolders(str_folder)
                        obj_Utilities.SparseCheckout('git@gitlab.devops-db.internal:infrastructure/pipelines/resources.git',
                                                     'master',
                                                     str_folderCheckout,
                                                     'usr-service-jenkins',
                                                     str_folder)
                        sh 'sleep 1s'
                        sh "PIP_CONFIG_FILE=${str_folder}${str_folderCheckout}/pip-devops.conf pip install devopsdb --break-system-packages"
                    }
                }
            }
        }
        stage('Script') {
            steps {
                container('container-1') {
                    script {
                        def str_folder = "${env.WORKSPACE}/pipelines/python/log"
                        def str_folderCheckout = "/python-log"
                        obj_Utilities.CreateFolders(str_folder)
                        obj_Utilities.SparseCheckout('git@gitlab.devops-db.internal:infrastructure/pipelines/tests.git',
                                                     'master',
                                                     str_folderCheckout,
                                                     'usr-service-jenkins',
                                                     str_folder)
                    }
                }
            }
        }
        stage('Run') {
            steps {
                container('container-1') {
                    script {
                        def str_folder = "${env.WORKSPACE}/pipelines/python/log"
                        def str_folderCheckout = "/python-log"
                        sh "python3 ${str_folder}${str_folderCheckout}/python-log.py"
                    }
                }
            }
        }
        stage('Cleanup') {
            steps {
                cleanWs deleteDirs: true, disableDeferredWipeout: true
            }
        }
    }
}
```
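Every `sh` step starts in the workspace, so the Run stage could also use a path relative to it instead of building the path from `env.WORKSPACE`. Below is a sketch of a drop-in replacement for that stage. It assumes the same checkout layout seen in the execution log (`.../pipelines/python/log/python-log/python-log.py` under the workspace).

```groovy
// Drop-in replacement for the Run stage above (goes inside stages { })
stage('Run') {
    steps {
        container('container-1') {
            // sh starts in ${env.WORKSPACE}, so this resolves to
            // ${env.WORKSPACE}/pipelines/python/log/python-log/python-log.py
            sh 'python3 pipelines/python/log/python-log/python-log.py'
        }
    }
}
```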

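And here is the manifest YAML file, this time without the jnlp container. When the manifest does not declare one, the Kubernetes plugin adds its default jnlp container.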

```yaml
apiVersion: v1
kind: Pod
spec:
  containers:
    - name: container-1
      image: registry.devops-db.internal:5000/img-jenkins-devopsdb:2.0
      env:
        - name: CONTAINER_NAME
          value: "container-1"
      volumeMounts:
        - name: shared-volume
          mountPath: /mnt
      command:
        - cat
      tty: true
  volumes:
    - name: shared-volume
      emptyDir: {}
```

In the end, the pipeline runs perfectly. The behavior is the same: the Shared Library still runs in the jnlp container. This is transparent to the pipeline because all containers share the workspace through workspace-volume. See this last piece of the execution log. The JENKINS-30600 warning points to the same behavior: the container launcher is ignored, so Git is not run inside the designated container.

```
23:00:30 [INFO] [CreateFolders] Structure created: /home/jenkins/agent/workspace/infrastructure/pipelines/tests/test_file_pod/pipelines/python/log
[Pipeline] checkout
23:00:30 The recommended git tool is: NONE
23:00:30 using credential usr-service-jenkins
23:00:30 Warning: JENKINS-30600: special launcher org.csanchez.jenkins.plugins.kubernetes.pipeline.ContainerExecDecorator$1@292a197e; decorates RemoteLauncher[hudson.remoting.Channel@3ea651da:JNLP4-connect connection from 172.21.5.156/172.21.5.156:29621] will be ignored (a typical symptom is the Git executable not being run inside a designated container)
23:00:30 Cloning the remote Git repository
23:00:30 Using no checkout clone with sparse checkout.
23:00:31 Avoid second fetch
23:00:31 Checking out Revision 6f1e302a943c26e01f605c5fe0905fc4d8114ba8 (refs/remotes/origin/master)
23:00:31 Commit message: "[FEAT] - 2024-06-26 22:59:54"
23:00:30 Cloning repository git@gitlab.devops-db.internal:infrastructure/pipelines/tests.git
23:00:30 > git init /home/jenkins/agent/workspace/infrastructure/pipelines/tests/test_file_pod/pipelines/python/log # timeout=10
23:00:30 Fetching upstream changes from git@gitlab.devops-db.internal:infrastructure/pipelines/tests.git
23:00:30 > git --version # timeout=10
23:00:30 > git --version # 'git version 2.39.2'
23:00:30 using GIT_SSH to set credentials usr-service-jenkins
23:00:30 Verifying host key using known hosts file, will automatically accept unseen keys
23:00:30 > git fetch --tags --force --progress -- git@gitlab.devops-db.internal:infrastructure/pipelines/tests.git +refs/heads/*:refs/remotes/origin/* # timeout=10
23:00:31 > git config remote.origin.url git@gitlab.devops-db.internal:infrastructure/pipelines/tests.git # timeout=10
23:00:31 > git config --add remote.origin.fetch +refs/heads/*:refs/remotes/origin/* # timeout=10
23:00:31 > git rev-parse refs/remotes/origin/master^{commit} # timeout=10
23:00:31 > git config core.sparsecheckout # timeout=10
23:00:31 > git config core.sparsecheckout true # timeout=10
23:00:31 > git read-tree -mu HEAD # timeout=10
23:00:31 > git checkout -f 6f1e302a943c26e01f605c5fe0905fc4d8114ba8 # timeout=180
23:00:31 > git rev-list --no-walk a6e2497c1e5956caac3fdb0a8f124f3387b13f2c # timeout=10
23:00:31 Cleaning workspace
[Pipeline] }
23:00:31 > git rev-parse --verify HEAD # timeout=10
23:00:31 Resetting working tree
23:00:31 > git reset --hard # timeout=10
23:00:31 > git clean -fdx # timeout=10
[Pipeline] // script
[Pipeline] }
[Pipeline] // container
[Pipeline] }
[Pipeline] // stage
[Pipeline] stage
[Pipeline] { (Run)
[Pipeline] container
[Pipeline] {
[Pipeline] script
[Pipeline] {
[Pipeline] sh
23:00:32 + python3 /home/jenkins/agent/workspace/infrastructure/pipelines/tests/test_file_pod/pipelines/python/log/python-log/python-log.py
23:00:32 [22:00:32] - INFO - [TestPipeline]: Info Log in the test in python.
[Pipeline] }
[Pipeline] // script
[Pipeline] }
[Pipeline] // container
[Pipeline] }
[Pipeline] // stage
[Pipeline] stage
[Pipeline] { (Cleanup)
[Pipeline] cleanWs
23:00:32 [WS-CLEANUP] Deleting project workspace...
23:00:32 [WS-CLEANUP] Deferred wipeout is disabled by the job configuration...
23:00:32 [WS-CLEANUP] done
[Pipeline] }
[Pipeline] // stage
[Pipeline] }
[Pipeline] // timestamps
[Pipeline] }
[Pipeline] // node
[Pipeline] }
[Pipeline] // retry
[Pipeline] }
[Pipeline] // podTemplate
[Pipeline] End of Pipeline
Finished: SUCCESS
```